SUMMER 2020 ROBOT

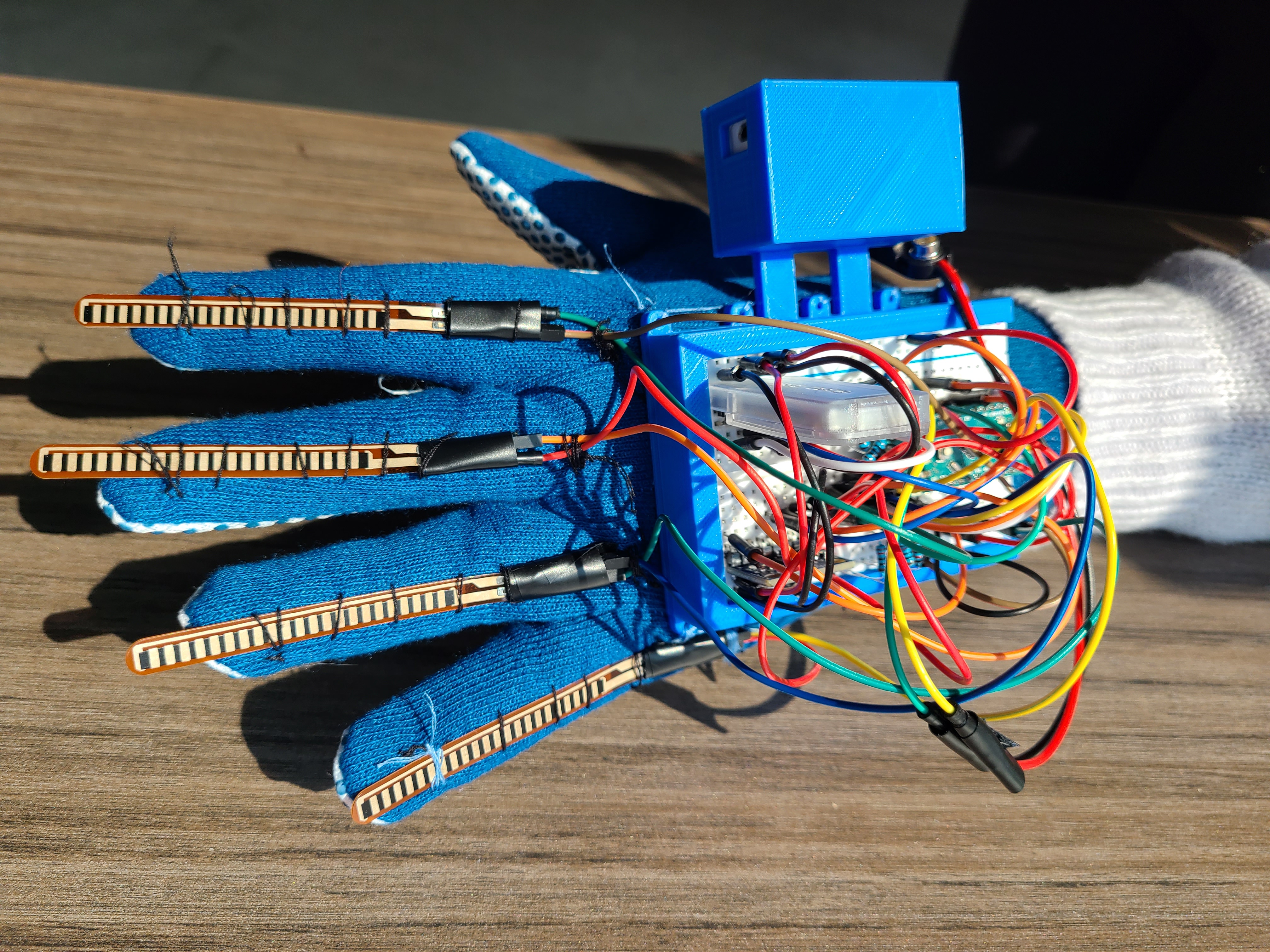

Glove

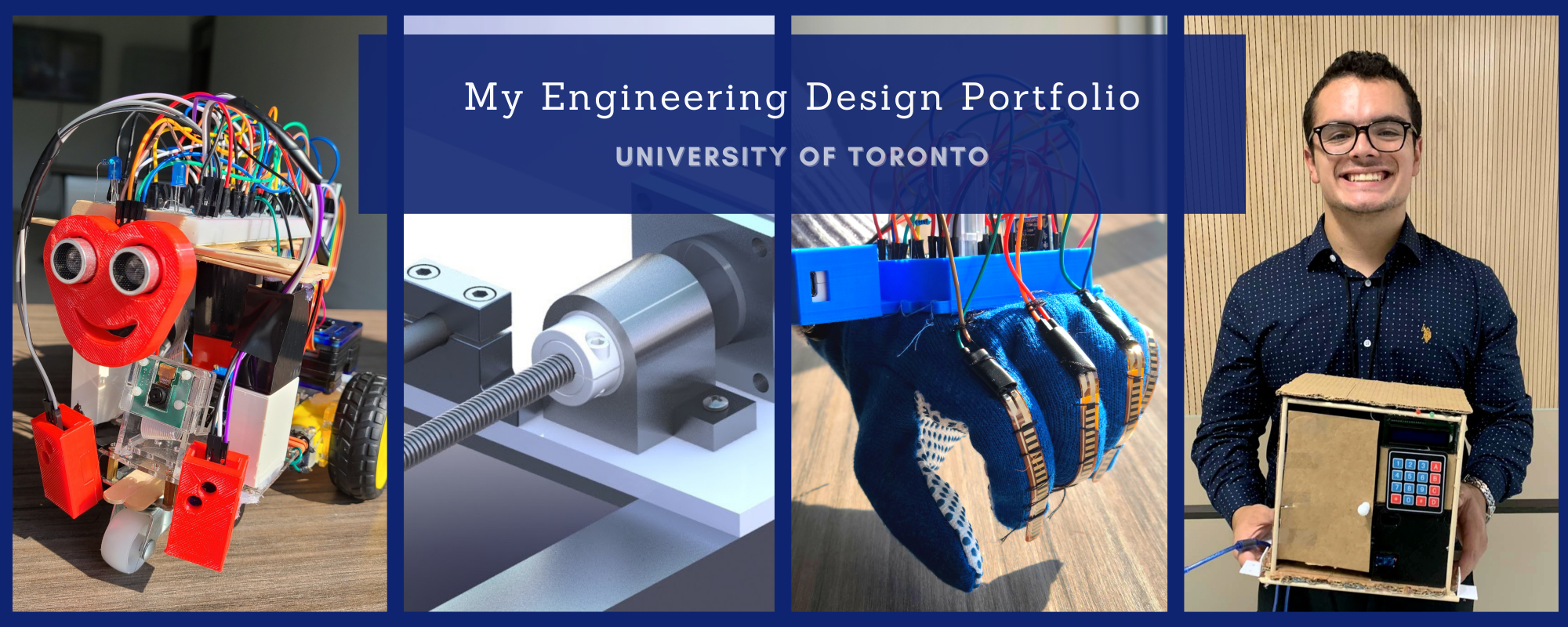

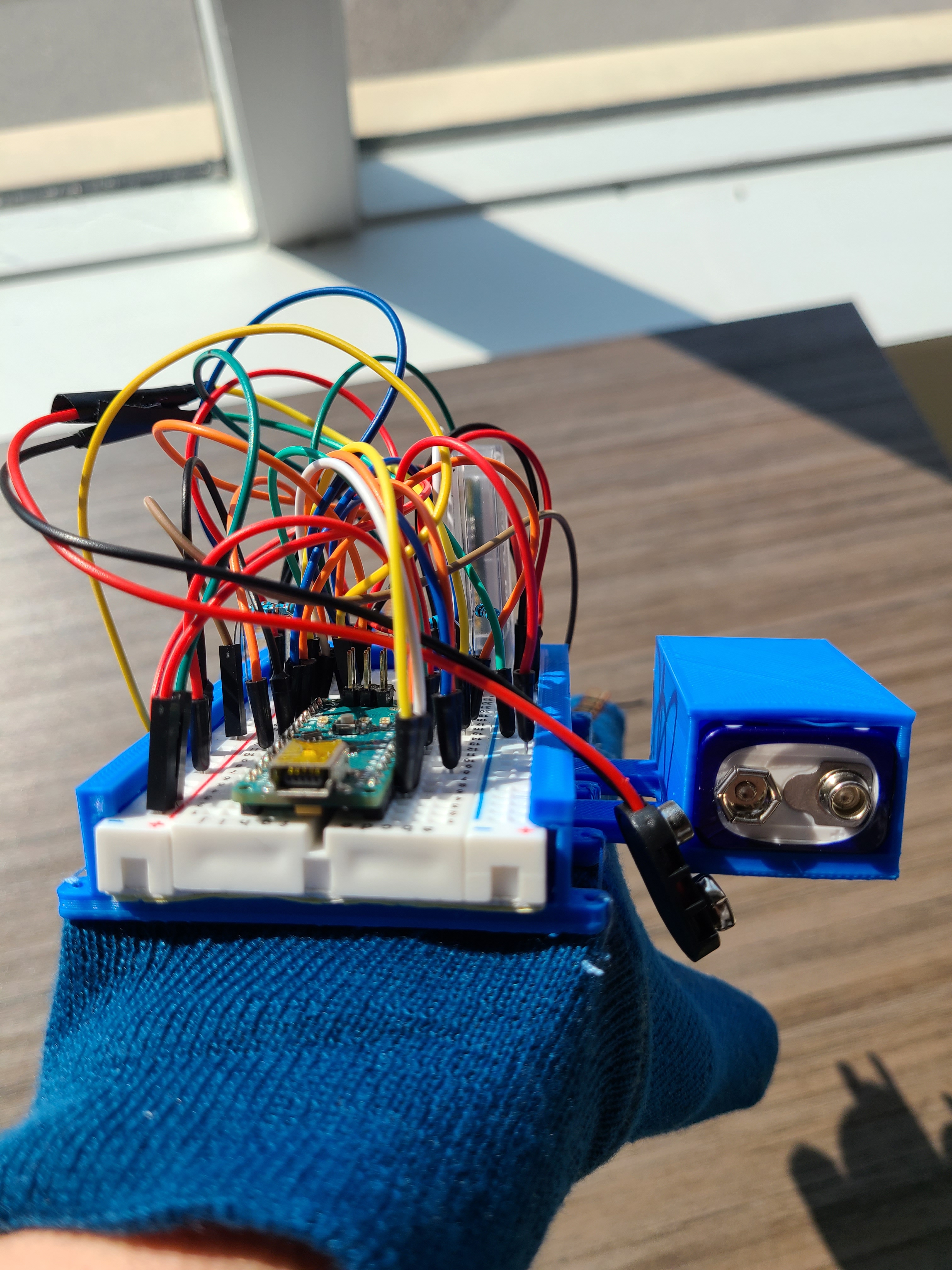

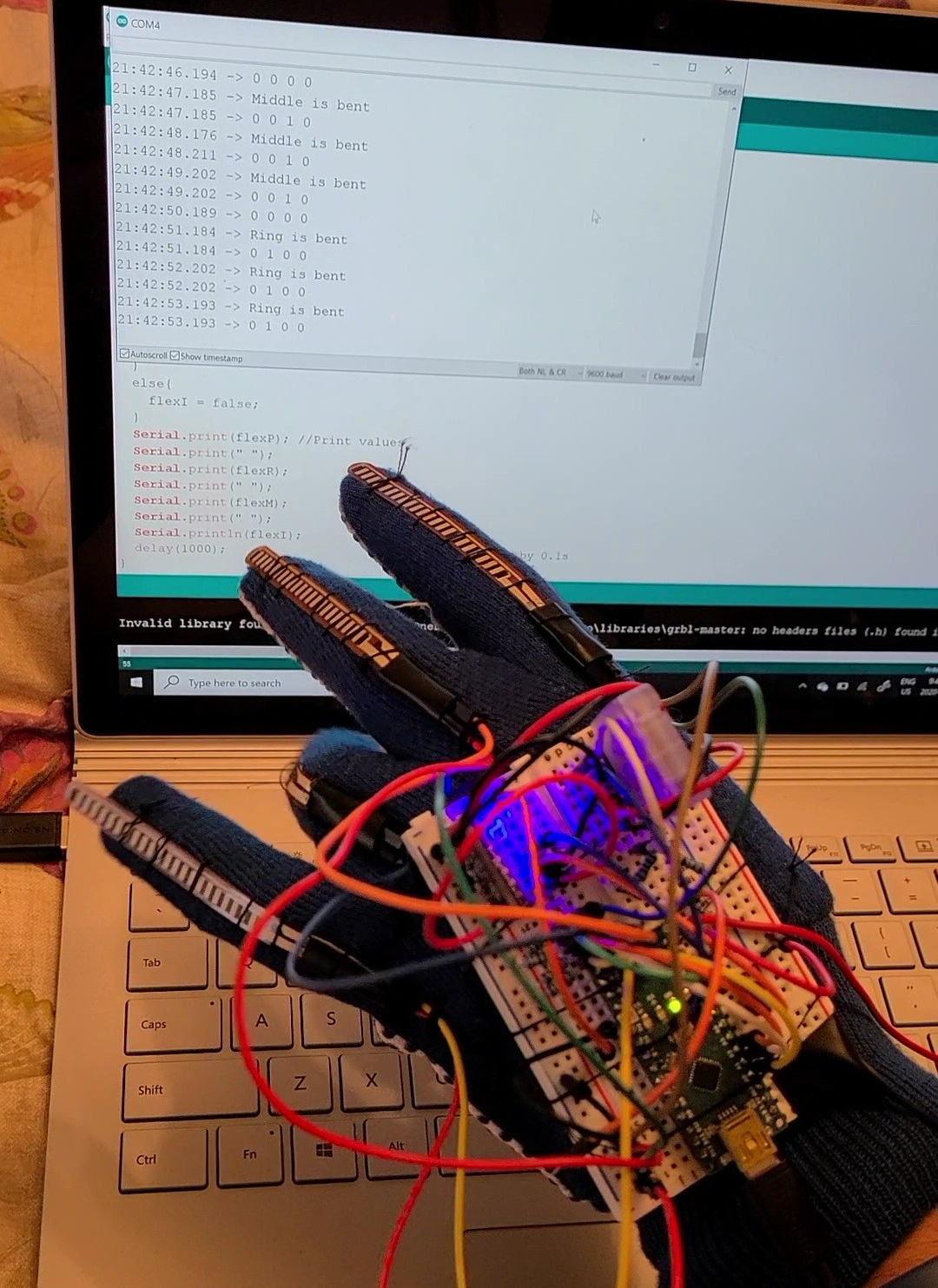

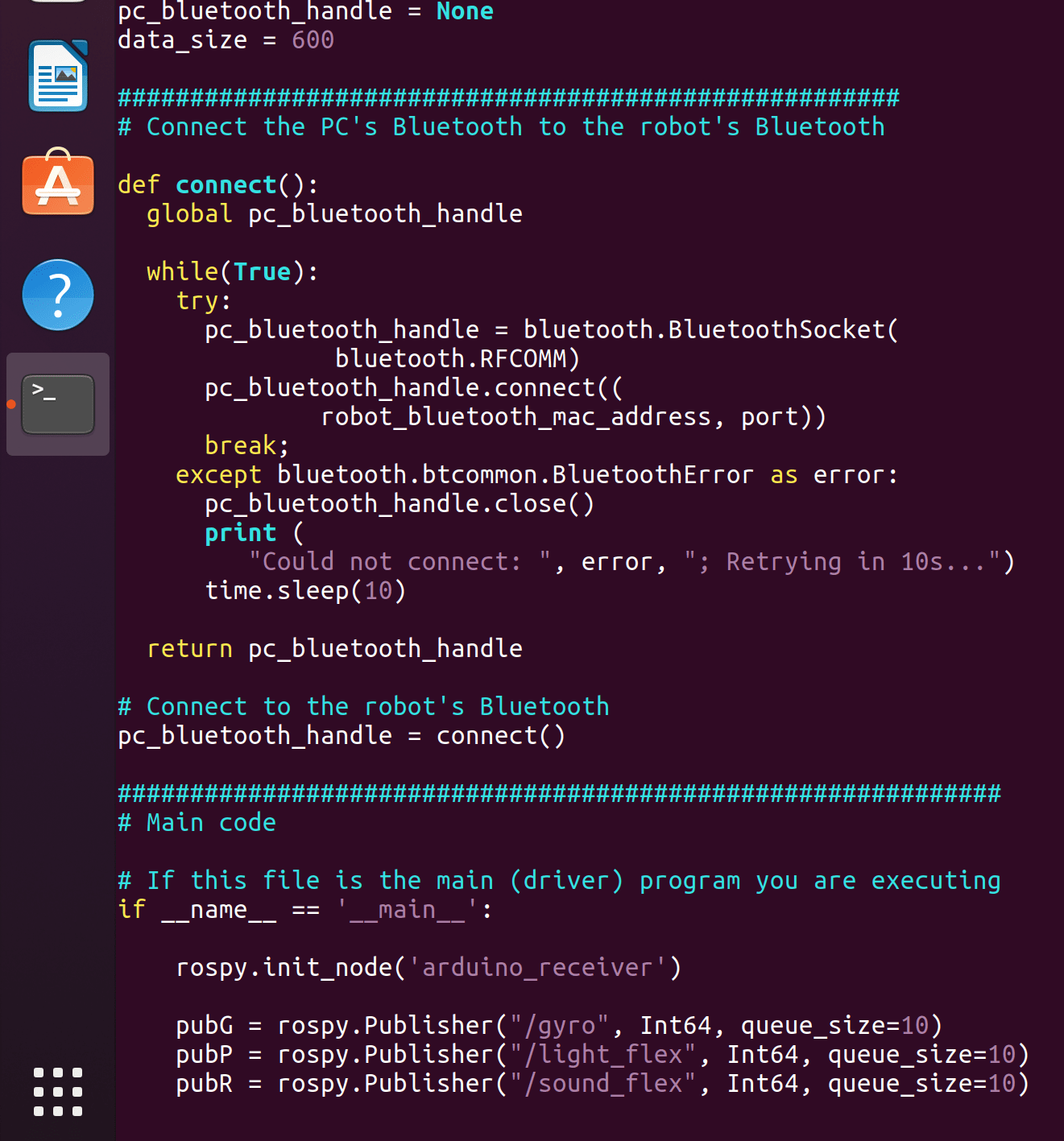

The primary goal of the glove was to provide a simple interface that lets a user control the robot without much prior knowledge. The glove measures the tilt of the user's hand and translates it into commands for how the robot should move. To extend the interface further, bending each finger triggers its own command: the pinky activates the lights; the ring finger sounds the horn; the middle finger activates the autodrive mode, which navigates around a room while avoiding obstacles; and the index finger commands the robot to follow a complex, predetermined black line on the floor. An Arduino reads the user's movements, converts them into commands, and sends them to the PC via Bluetooth. To hold all the components securely, we 3D printed a custom mount.
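The gesture-to-command mapping described above can be sketched as follows. This is a minimal illustration, not the project's Arduino firmware (which would be written in Arduino C++). It assumes the tilt arrives as pitch and roll angles in degrees and that each finger's flex reading is normalised to the range 0.0 to 1.0. The thresholds, the command names, the sign conventions for direction, and the comma-separated Bluetooth message format are all hypothetical.

```python
"""Hypothetical sketch of the glove's gesture-to-command mapping."""

TILT_DEADZONE_DEG = 15.0  # hypothetical: ignore small, accidental tilts
FLEX_THRESHOLD = 0.6      # hypothetical: a reading above this counts as "bent"

# Finger-to-command assignments as described in the write-up
# (command names are illustrative).
FINGER_COMMANDS = {
    "pinky": "LIGHTS",
    "ring": "HORN",
    "middle": "AUTODRIVE",
    "index": "LINE_FOLLOW",
}


def tilt_to_drive(pitch_deg: float, roll_deg: float) -> str:
    """Map hand tilt to a drive command; the more strongly tilted axis wins."""
    if abs(pitch_deg) < TILT_DEADZONE_DEG and abs(roll_deg) < TILT_DEADZONE_DEG:
        return "STOP"
    if abs(pitch_deg) >= abs(roll_deg):
        # Assumed convention: positive pitch (hand tipped forward) drives forward.
        return "FORWARD" if pitch_deg > 0 else "BACKWARD"
    # Assumed convention: positive roll turns right.
    return "RIGHT" if roll_deg > 0 else "LEFT"


def glove_commands(pitch_deg: float, roll_deg: float, flex: dict) -> list:
    """Return the drive command followed by every active finger command."""
    commands = [tilt_to_drive(pitch_deg, roll_deg)]
    for finger, command in FINGER_COMMANDS.items():
        if flex.get(finger, 0.0) > FLEX_THRESHOLD:
            commands.append(command)
    return commands


def encode(commands: list) -> bytes:
    """Pack commands into one newline-terminated line for the serial link
    (hypothetical message format)."""
    return (",".join(commands) + "\n").encode("ascii")


if __name__ == "__main__":
    # Hand tipped forward with the ring finger bent: drive forward and honk.
    cmds = glove_commands(30.0, 5.0, {"ring": 0.9})
    print(cmds, encode(cmds))
```

A dead zone around the level position keeps small, unintentional hand movements from making the robot creep. Letting the dominant axis decide means the robot receives exactly one drive command per reading.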

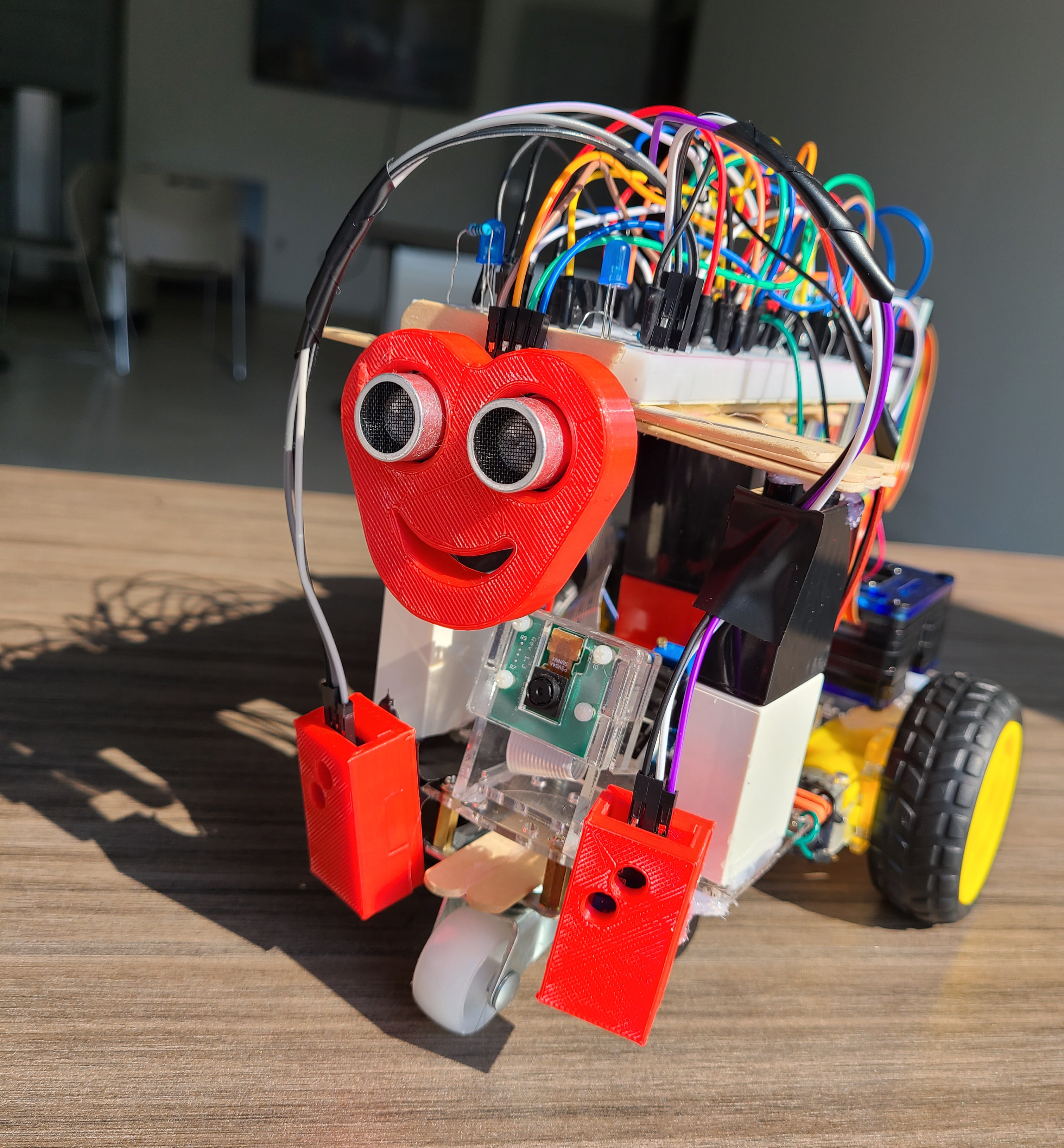

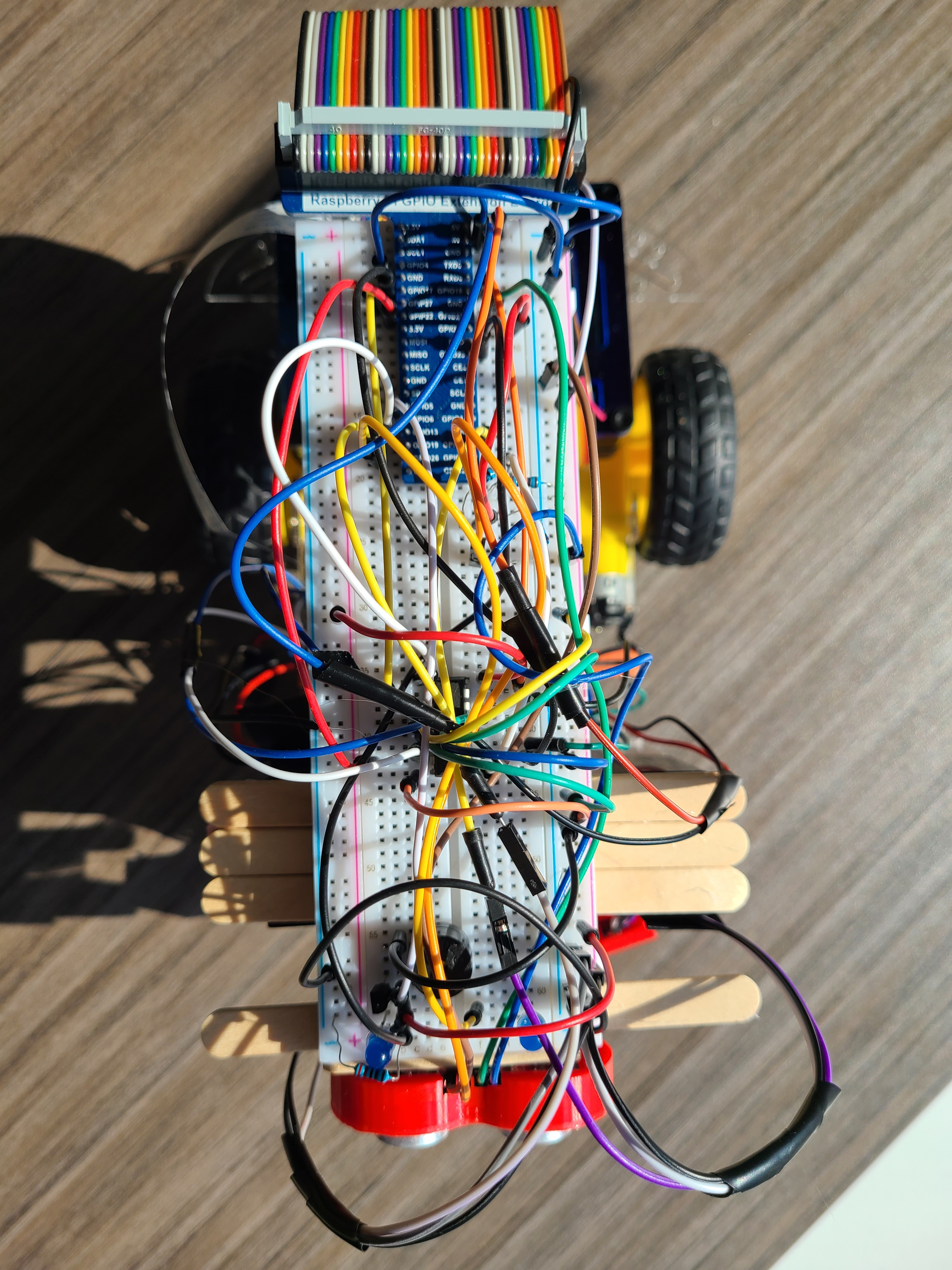

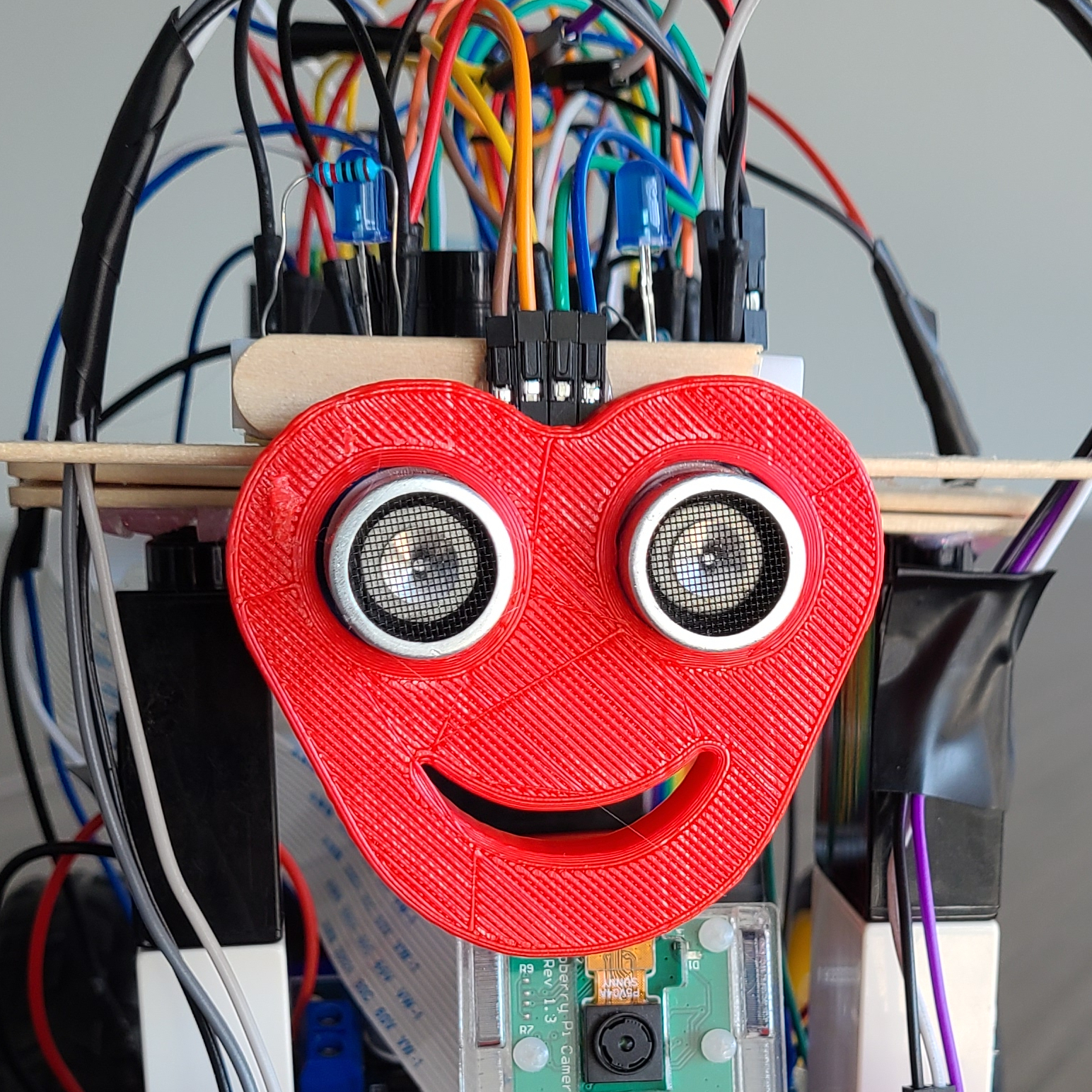

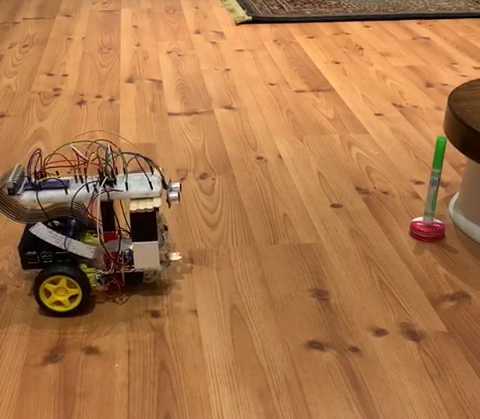

CAR

The brains of the vehicle lie in the onboard Raspberry Pi, which acts as a small computer. Connected to it is a wide assortment of sensors and outputs: the motors, an 8 MP camera, an ultrasonic sensor module, a pair of infrared sensors, a pair of lights, and a loud horn. Because of the Pi's relatively limited computing power, it serves as a relay, sending sensor readings to the remote PC and receiving the corresponding commands.
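The sketch below shows the kind of logic that turns these raw sensor readings into commands. It assumes an HC-SR04-style ultrasonic module that reports the width of its echo pulse in seconds. It also assumes the two infrared sensors straddle the black line and each read `True` when over it. The write-up does not describe the decision rules, so the junction behaviour, the 20 cm safety distance, and the "turn right" avoidance move are hypothetical.

```python
SPEED_OF_SOUND_CM_PER_S = 34300.0  # about 343 m/s in air at roughly 20 degrees C


def echo_to_distance_cm(echo_duration_s: float) -> float:
    """Convert an ultrasonic echo pulse width to a distance.

    The pulse covers the round trip to the obstacle and back,
    so the one-way distance is half of speed * time.
    """
    return echo_duration_s * SPEED_OF_SOUND_CM_PER_S / 2.0


def line_follow_step(left_on_line: bool, right_on_line: bool) -> str:
    """Two-sensor line following, assuming the sensors straddle the line."""
    if left_on_line and right_on_line:
        return "STOP"     # hypothetical: treat a crossing or junction as a stop
    if left_on_line:
        return "LEFT"     # the line has drifted under the left sensor
    if right_on_line:
        return "RIGHT"    # the line has drifted under the right sensor
    return "FORWARD"      # the line is still between the two sensors


def autodrive_step(distance_cm: float, safe_distance_cm: float = 20.0) -> str:
    """Very simple obstacle avoidance: turn away when something is too close."""
    return "RIGHT" if distance_cm < safe_distance_cm else "FORWARD"


if __name__ == "__main__":
    d = echo_to_distance_cm(0.001)  # a 1 ms echo is roughly 17 cm away
    print(round(d, 2), autodrive_step(d), line_follow_step(True, False))
```

Halving the round-trip time is the key step in the distance conversion. Forgetting it doubles every reading, which would make the robot keep twice as much clearance from obstacles as intended.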

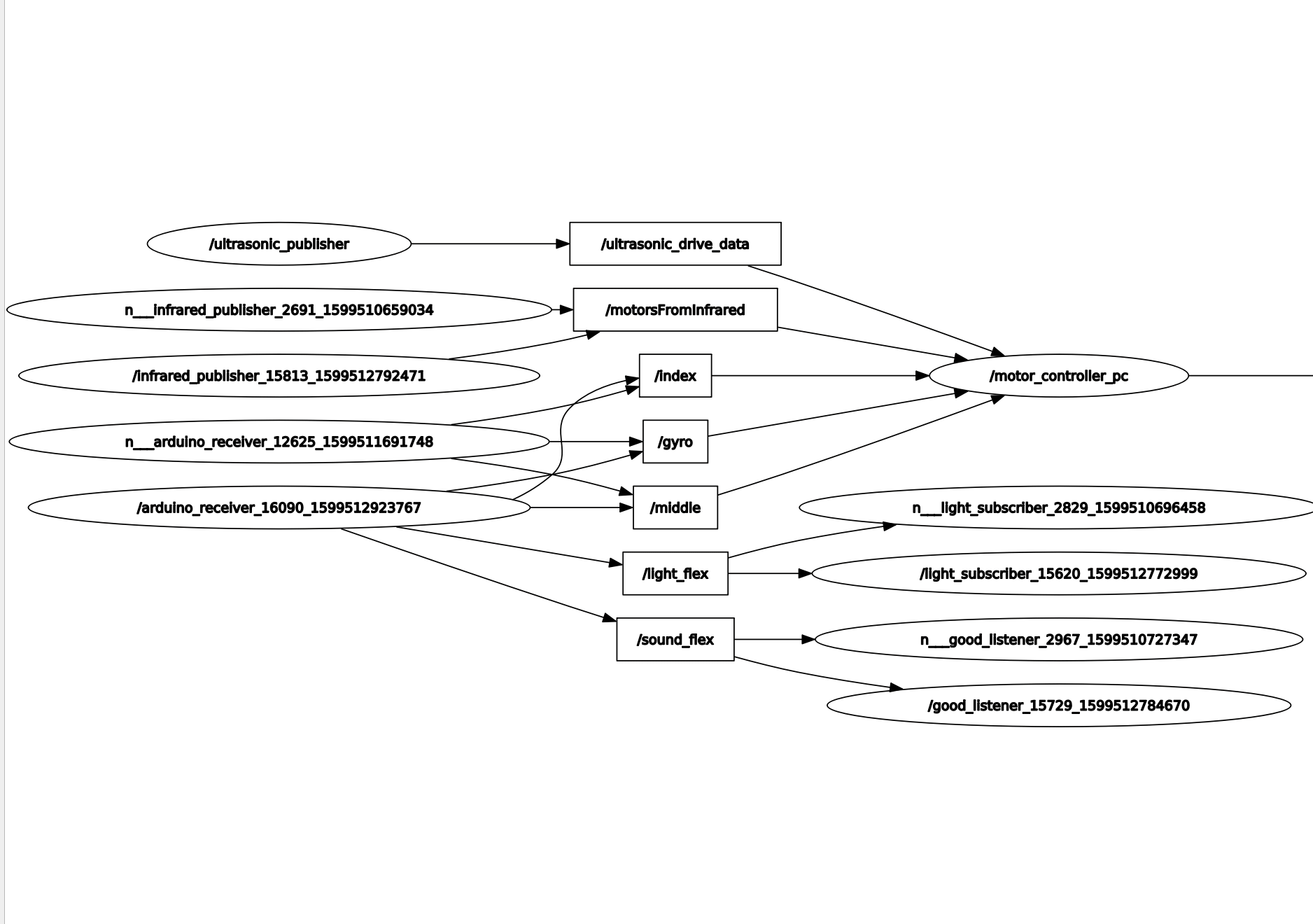

ROS

With so many components communicating wirelessly between the glove, PC, and car, we needed a common communication network to carry each sensor's readings and the corresponding commands for the different outputs. We built this with the Robot Operating System (ROS), as a series of nodes that are in constant communication. The Master Node runs on the PC, which is where all the information is directed and processed to output the appropriate commands. Please see the relevant section for more details on the specific code.
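The topic-based publish/subscribe pattern behind a ROS network can be illustrated with a toy, in-process model. This is not the ROS API. A real ROS 1 node would use `rospy.Publisher` and `rospy.Subscriber`, and in ROS 1 the master only handles registration and name lookup, while messages travel directly between nodes. The toy routes messages through its registry purely for simplicity. The topic names and the PC-side processing rule are hypothetical.

```python
from collections import defaultdict
from typing import Callable, DefaultDict, List


class ToyMaster:
    """Registry of topic -> subscriber callbacks (a stand-in for ROS name lookup)."""

    def __init__(self) -> None:
        self._subscribers: DefaultDict[str, List[Callable[[str], None]]] = defaultdict(list)

    def subscribe(self, topic: str, callback: Callable[[str], None]) -> None:
        self._subscribers[topic].append(callback)

    def publish(self, topic: str, message: str) -> None:
        # Deliver the message to every callback registered on this topic.
        for callback in list(self._subscribers[topic]):
            callback(message)


master = ToyMaster()
motor_log: List[str] = []

# Car side (hypothetical): the Pi records whatever motor commands arrive.
master.subscribe("/car/motor_cmd", motor_log.append)

DRIVE_COMMANDS = {"FORWARD", "BACKWARD", "LEFT", "RIGHT", "STOP"}


def on_glove_command(cmd: str) -> None:
    """PC side (hypothetical): forward only drive gestures to the motors."""
    if cmd in DRIVE_COMMANDS:
        master.publish("/car/motor_cmd", cmd)


master.subscribe("/glove/command", on_glove_command)

# Glove side: a published gesture flows glove -> PC node -> car.
master.publish("/glove/command", "FORWARD")
print(motor_log)
```

Because each component only knows topic names, not the other components, the glove, PC, and car can be developed and restarted independently. This decoupling is the main reason a pub/sub layer suits a three-device system like this one.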

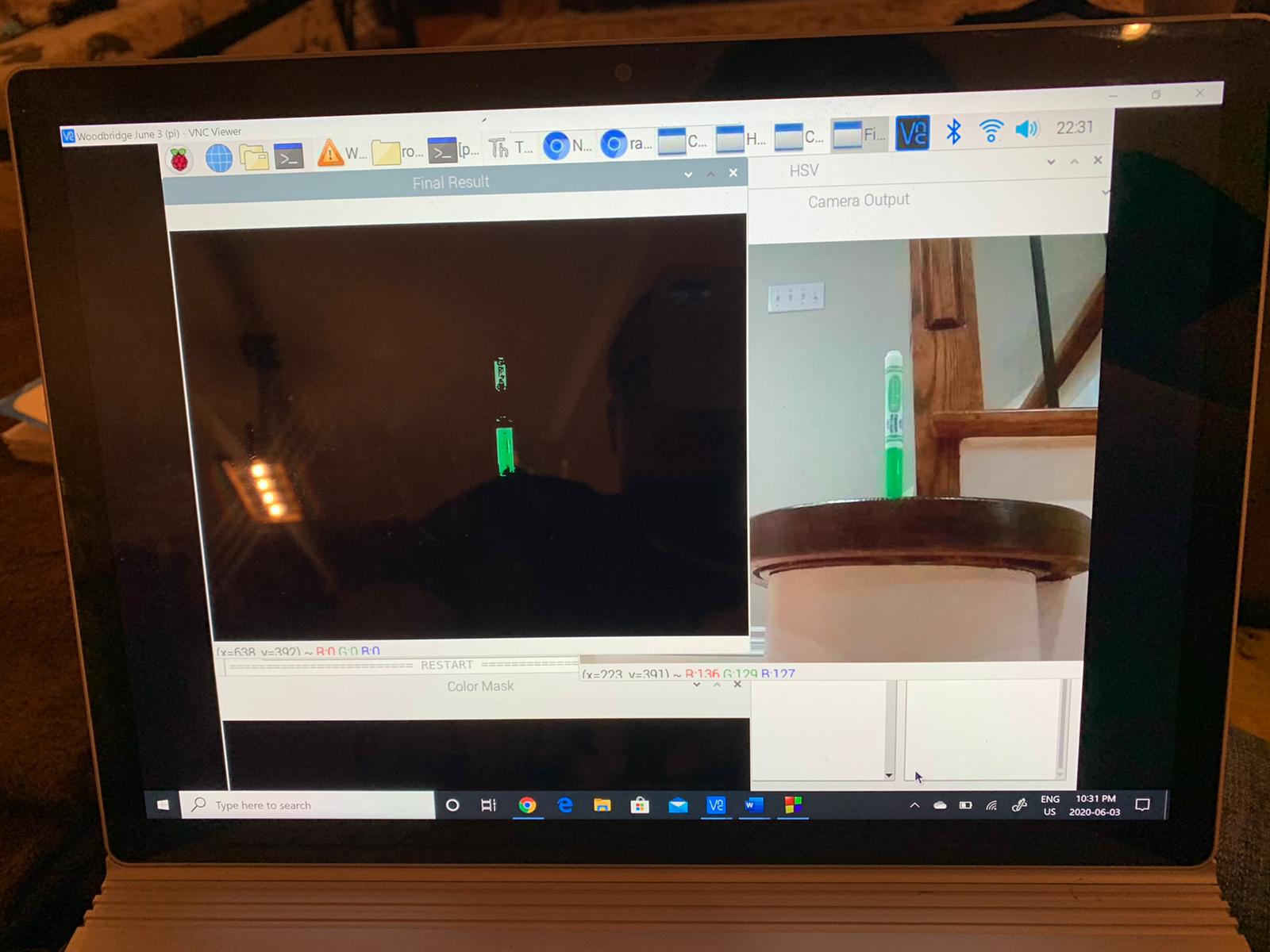

OpenCV

We currently have a working program that allows the robot to autonomously search for a specific colour hue. When the camera detects this hue, the Raspberry Pi processes the image using OpenCV in a Python 3 script and commands the motors to follow the colour until the robot is within 5 cm of it. This program cannot yet be activated through the glove, but we plan to add this in future iterations of the project.
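The colour-following behaviour can be sketched without OpenCV so that it runs anywhere. In OpenCV the equivalent steps would typically be `cv2.cvtColor(frame, cv2.COLOR_BGR2HSV)`, `cv2.inRange` for the mask, and `cv2.moments` for the centroid. Note that OpenCV's 8-bit HSV stores hue as 0 to 179, while Python's `colorsys` uses 0.0 to 1.0. The target hue (green), the tolerances, and the steering band are hypothetical. The write-up also does not say how the 5 cm distance is measured, so the sketch assumes a distance reading such as the ultrasonic sensor's.

```python
import colorsys

TARGET_HUE = 1 / 3        # hypothetical target: green (colorsys hue is 0.0-1.0)
HUE_TOLERANCE = 0.05      # hypothetical
MIN_SATURATION = 0.4      # hypothetical: skip washed-out pixels
MIN_VALUE = 0.2           # hypothetical: skip very dark pixels
STOP_DISTANCE_CM = 5.0    # stopping distance from the write-up


def hue_matches(rgb) -> bool:
    """True if an (R, G, B) pixel with 0-255 channels is close to the target hue."""
    r, g, b = (c / 255.0 for c in rgb)
    h, s, v = colorsys.rgb_to_hsv(r, g, b)
    # Hue is circular, so measure the shorter way around the colour wheel.
    diff = abs(h - TARGET_HUE)
    diff = min(diff, 1.0 - diff)
    return diff <= HUE_TOLERANCE and s >= MIN_SATURATION and v >= MIN_VALUE


def target_centroid_x(image):
    """Mean column index of matching pixels, or None if none match.

    `image` is a list of rows, each a list of (R, G, B) tuples.
    """
    xs = [x for row in image for x, px in enumerate(row) if hue_matches(px)]
    return sum(xs) / len(xs) if xs else None


def follow_step(image, distance_cm: float, centre_band: float = 0.2) -> str:
    """Choose one motor command from a camera frame and a distance reading."""
    cx = target_centroid_x(image)
    if cx is None:
        return "SEARCH"   # e.g. rotate in place until the hue appears
    if distance_cm <= STOP_DISTANCE_CM:
        return "STOP"     # close enough to the coloured target
    width = len(image[0])
    # Horizontal offset of the target, roughly -0.5 (far left) to +0.5 (far right).
    offset = (cx - (width - 1) / 2) / width
    if offset < -centre_band / 2:
        return "LEFT"
    if offset > centre_band / 2:
        return "RIGHT"
    return "FORWARD"


if __name__ == "__main__":
    frame = [[(0, 0, 0)] * 4 + [(0, 255, 0)]]  # one row, green at the right edge
    print(follow_step(frame, distance_cm=30.0))
```

Thresholding in HSV rather than RGB keeps the match fairly stable under changing lighting, because brightness mostly affects the value channel rather than the hue.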

My classmate and I are very proud of the progress we have made on this project, but that by no means indicates that it is over. Rather, we will continue improving its functionality and may add new sensors such as LIDAR. As mentioned in the OpenCV section, we plan to further integrate computer vision into our robot.